CSCI 331 Review 2

Mon Nov 04 2019

1 Ch 4: Iterative improvement

1.1 Simulated annealing

Idea: escape local maxima by allowing some bad moves, but gradually decrease their size and frequency. It works like hill climbing (gradient descent), except that worse moves are sometimes accepted. The idea comes from annealing in glassmaking, where you start very hot and then slowly lower the temperature.
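A minimal Python sketch, assuming we are maximizing and using the common acceptance probability e^(delta/T) for a worse move. `neighbor`, `value`, and `schedule` are hypothetical parameter names, and the geometric cooling schedule in the usage is only one possible choice.

```python
import math
import random

def simulated_annealing(initial, neighbor, value, schedule, max_steps=10_000, rng=None):
    """Maximize `value`, sometimes accepting worse moves.

    A move that changes the value by delta < 0 is accepted with probability
    exp(delta / T): large bad moves are less likely than small ones, and all
    bad moves get rarer as the temperature T cools.
    """
    rng = rng or random.Random()
    current = initial
    for t in range(max_steps):
        T = schedule(t)
        if T <= 0:                      # fully cooled: stop
            break
        candidate = neighbor(current, rng)
        delta = value(candidate) - value(current)
        # Always accept improvements; accept bad moves with probability e^(delta/T)
        if delta > 0 or rng.random() < math.exp(delta / T):
            current = candidate
    return current

# Usage: maximize -(x - 3)^2 over the integers, stepping +/-1 at a time
best = simulated_annealing(
    initial=-20,
    neighbor=lambda x, rng: x + rng.choice((-1, 1)),
    value=lambda x: -(x - 3) ** 2,
    schedule=lambda t: 5.0 * 0.99 ** t,  # hypothetical geometric cooling schedule
    max_steps=2000,
    rng=random.Random(0),
)
```

Early on (high T) the search wanders; by the end T is tiny, so only improving moves are taken and it settles on x = 3.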

1.2 Beam search

Idea: keep k states instead of 1; choose top k of their successors.

Problem: quite often all k states end up on the same local hill. This can be partly overcome by choosing the k successors randomly, biased toward the good ones (stochastic beam search).
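A small Python sketch of both variants. The plain version keeps the top k successors; the `stochastic=True` version samples k successors with weights that grow with their value. Weighting by shifted value is an assumption here; the notes only say "favoring the good ones". All function and parameter names are hypothetical.

```python
import random

def local_beam_search(initial_states, successors, value, k, steps,
                      stochastic=False, rng=None):
    """Keep k states; each step, pick k of their pooled successors."""
    rng = rng or random.Random()
    beam = sorted(initial_states, key=value, reverse=True)[:k]
    best = beam[0]
    for _ in range(steps):
        pool = [s for state in beam for s in successors(state)]
        if not pool:
            break
        if stochastic:
            # Sample k successors at random, biased toward the better ones
            low = min(value(s) for s in pool)
            weights = [value(s) - low + 1 for s in pool]  # shift so every weight >= 1
            beam = rng.choices(pool, weights=weights, k=k)
        else:
            # Plain local beam search: keep the top k successors
            beam = sorted(pool, key=value, reverse=True)[:k]
        best = max([best, *beam], key=value)
    return best

succ = lambda x: [x - 1, x + 1]
val = lambda x: -(x - 7) ** 2
print(local_beam_search([0, 20], succ, val, k=2, steps=50))
```

With starts 0 and 20, the plain version immediately keeps only successors of 0, which shows the "same hill" problem described above.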

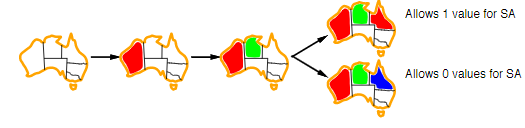

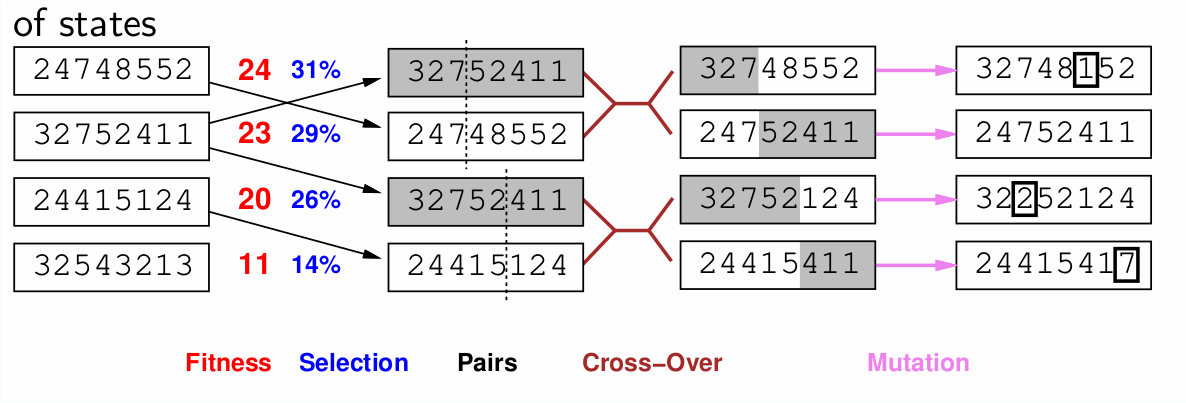

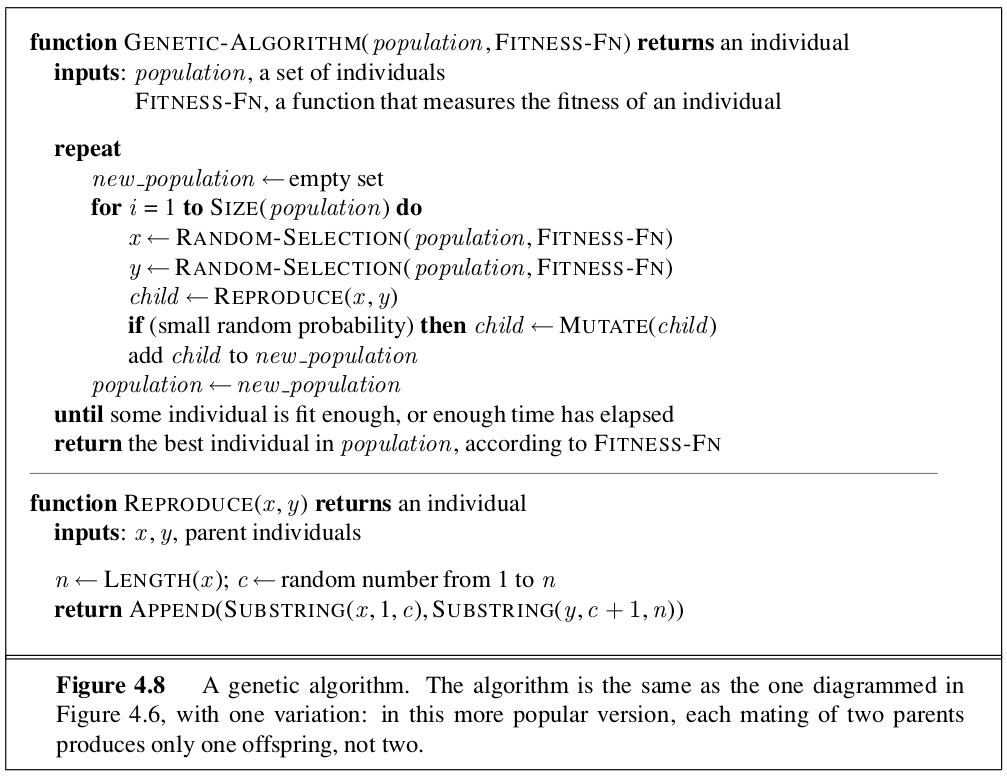

1.3 Genetic algorithms

Inspired by Charles Darwin’s theory of evolution. The algorithm is a variant of local beam search in which successors are generated by combining pairs of individuals (crossover) rather than by a successor function.
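A minimal Python sketch on bit lists, assuming fitness-proportional selection, single-point crossover, and a one-bit mutation per child. The toy "OneMax" problem (fitness = number of 1 bits) and all names are hypothetical; fitness is assumed non-negative and individuals at least 2 bits long.

```python
import random

def genetic_algorithm(population, fitness, generations, mutation_rate=0.1, rng=None):
    """Evolve fixed-length bit lists; returns the fittest individual seen."""
    rng = rng or random.Random()
    n = len(population[0])                       # assumes n >= 2
    best = max(population, key=fitness)
    for _ in range(generations):
        # Fitness-proportional selection (+1 keeps every weight positive)
        weights = [fitness(ind) + 1 for ind in population]
        next_gen = []
        for _ in range(len(population)):
            mom, dad = rng.choices(population, weights=weights, k=2)
            cut = rng.randrange(1, n)            # single-point crossover
            child = mom[:cut] + dad[cut:]
            if rng.random() < mutation_rate:     # mutation: flip one random bit
                i = rng.randrange(n)
                child[i] ^= 1
            next_gen.append(child)
        population = next_gen
        best = max([best, *population], key=fitness)
    return best

# Usage (hypothetical OneMax problem): fitness = number of 1 bits
rng = random.Random(42)
start = [[rng.randint(0, 1) for _ in range(8)] for _ in range(20)]
best = genetic_algorithm(start, fitness=sum, generations=50, rng=random.Random(1))
print(best, sum(best))
```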

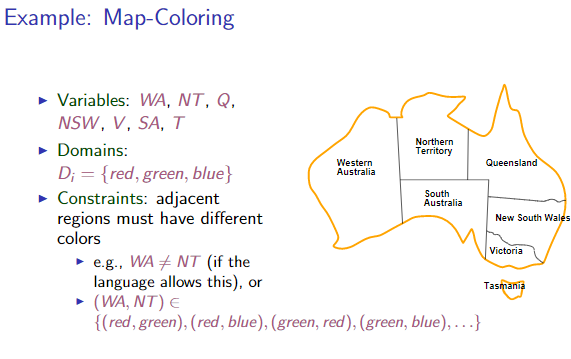

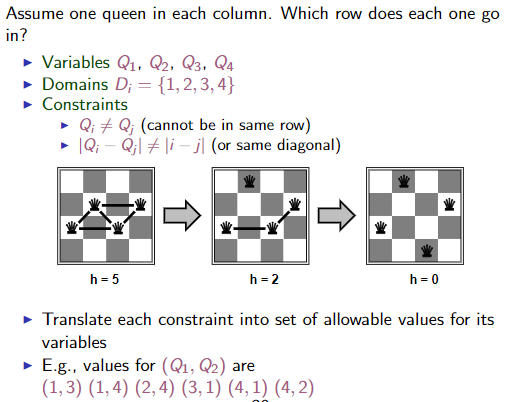

2 Ch 6: Constraint satisfaction problems

Example CSPs:

- assignment problems

- timetabling

- hardware configuration

- spreadsheets

- factory scheduling

- floor-planning

2.1 Problem formulation

2.1.1 Variables

The elements of the problem that must be assigned values, \(X_1, \dots, X_n\).

2.1.2 Domains

Each variable \(X_i\) takes its value from a domain \(D_i\) of possible values. Try to be mathematical (precise) when formulating domains.

2.1.3 Constraints

Constraints specify which values (or combinations of values) from their domains the variables are allowed to take.

Types of constraints:

- Unary: Constraints involving a single variable

- Binary: Constraints involving pairs of variables

- Higher-order: Constraints involving 3 or more variables

- Preferences (soft constraints): You favor some values in the domain over others. These are mostly used for constrained optimization problems.
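To make the formulation concrete, here is a sketch using the textbook's Australia map-coloring problem as an assumed example (it is not part of these notes): seven region variables, a three-color domain, and binary "adjacent regions differ" constraints.

```python
# Variables: the regions of Australia (hypothetical running example)
variables = ["WA", "NT", "SA", "Q", "NSW", "V", "T"]

# Domains: every region can take one of three colors
domains = {v: ["red", "green", "blue"] for v in variables}

# Binary constraints: these pairs of adjacent regions must get different colors
constraints = [("WA", "NT"), ("WA", "SA"), ("NT", "SA"), ("NT", "Q"), ("SA", "Q"),
               ("SA", "NSW"), ("SA", "V"), ("Q", "NSW"), ("NSW", "V")]

def consistent(assignment):
    """True if no binary constraint is violated by a (possibly partial) assignment."""
    return all(assignment[a] != assignment[b]
               for a, b in constraints
               if a in assignment and b in assignment)

solution = {"WA": "red", "NT": "green", "SA": "blue", "Q": "red",
            "NSW": "green", "V": "red", "T": "green"}
print(consistent(solution))
```

Checking only the constraints whose variables are both assigned lets the same function test the partial assignments built up during backtracking.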

2.2 Constraint graphs

Nodes in the graph are variables; arcs connect variables that share a constraint.
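A short sketch that builds a constraint graph as adjacency sets from a list of binary constraints. The Australia map-coloring data is an assumed example, and `constraint_graph` is a hypothetical helper name.

```python
def constraint_graph(variables, binary_constraints):
    """Adjacency sets: one node per variable, an arc per constrained pair."""
    graph = {v: set() for v in variables}
    for a, b in binary_constraints:
        graph[a].add(b)
        graph[b].add(a)
    return graph

# Hypothetical example: the Australia map-coloring CSP
variables = ["WA", "NT", "SA", "Q", "NSW", "V", "T"]
pairs = [("WA", "NT"), ("WA", "SA"), ("NT", "SA"), ("NT", "Q"), ("SA", "Q"),
         ("SA", "NSW"), ("SA", "V"), ("Q", "NSW"), ("NSW", "V")]
graph = constraint_graph(variables, pairs)
print(sorted(graph["SA"]))
```

Tasmania ("T") has no constraints, so it ends up as an isolated node.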

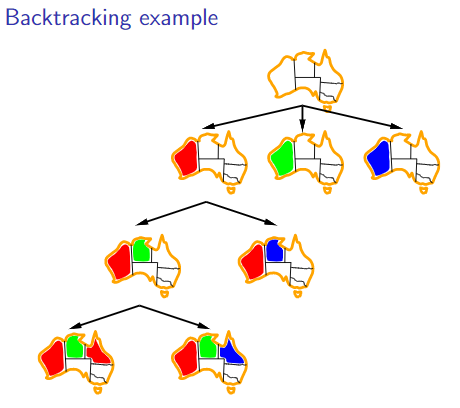

2.3 Backtracking

2.3.1 Minimum remaining value

Choose the variable with the fewest legal values left.

2.3.2 Degree heuristic

Tie-breaker for the minimum remaining value heuristic: choose the variable involved in the most constraints on the remaining (unassigned) variables.
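The two heuristics above combine naturally into one variable-selection function. This sketch assumes every constraint is "neighbors differ" (as in the hypothetical Australia map-coloring example) so that legal values can be read straight off the graph.

```python
variables = ["WA", "NT", "SA", "Q", "NSW", "V", "T"]
domains = {v: ["red", "green", "blue"] for v in variables}
graph = {"WA": {"NT", "SA"}, "NT": {"WA", "SA", "Q"}, "SA": {"WA", "NT", "Q", "NSW", "V"},
         "Q": {"NT", "SA", "NSW"}, "NSW": {"SA", "Q", "V"}, "V": {"SA", "NSW"}, "T": set()}

def select_unassigned_variable(assignment, domains, graph):
    """MRV, with the degree heuristic as tie-breaker ("neighbors differ" constraints)."""
    def legal_values(var):
        # Values not already taken by an assigned neighbor
        return [x for x in domains[var]
                if all(assignment.get(n) != x for n in graph[var])]

    def unassigned_degree(var):
        # Number of constraints with other still-unassigned variables
        return sum(1 for n in graph[var] if n not in assignment)

    unassigned = [v for v in domains if v not in assignment]
    # Fewest legal values first; among ties, most constraints on unassigned variables
    return min(unassigned, key=lambda v: (len(legal_values(v)), -unassigned_degree(v)))

print(select_unassigned_variable({}, domains, graph))
```

At the start every region has 3 legal values, so the degree heuristic breaks the tie and picks SA, which borders five other regions.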

2.3.3 Least constraining value

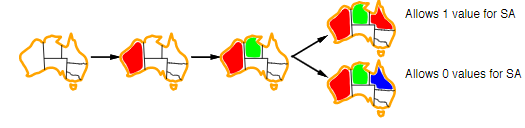

Given a variable, choose the least constraining value: the one that rules out the fewest values in the remaining variables.
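A sketch of value ordering by least constraining value, again assuming "neighbors differ" constraints and that `domains` holds each variable's current (already pruned) legal values. The small example data is hypothetical.

```python
def order_domain_values(var, assignment, domains, graph):
    """Least constraining value first: values that rule out the fewest neighbor options."""
    def ruled_out(value):
        # How many options this value would remove from unassigned neighbors' domains
        return sum(1 for n in graph[var]
                   if n not in assignment and value in domains[n])
    return sorted(domains[var], key=ruled_out)

# Hypothetical situation: Q can be red or blue; blue is still open for three neighbors
graph = {"Q": {"NT", "SA", "NSW"}, "NT": {"Q"}, "SA": {"Q"}, "NSW": {"Q"}}
domains = {"Q": ["red", "blue"], "NT": ["blue"], "SA": ["blue"], "NSW": ["red", "blue"]}
print(order_domain_values("Q", {}, domains, graph))
```

Here red only removes one option (from NSW), while blue would remove the last value from both NT and SA, so red is tried first.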

2.3.4 Forward checking

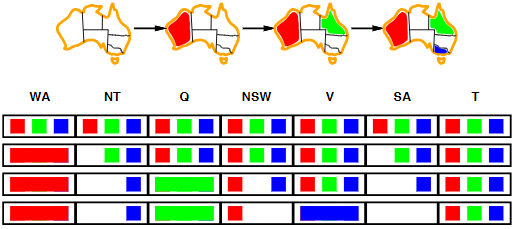

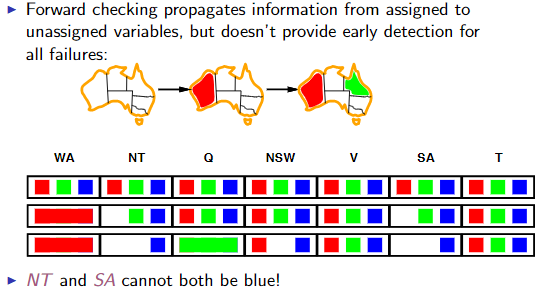

Keep track of the remaining legal values for unassigned variables, and terminate the search along the current branch when any variable has no legal values left. This reduces the number of nodes in the search tree you have to expand.
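A minimal sketch of backtracking search with forward checking, assuming "neighbors differ" constraints and the hypothetical Australia map-coloring data. For brevity it uses a fixed variable order instead of the heuristics above.

```python
variables = ["WA", "NT", "SA", "Q", "NSW", "V", "T"]
graph = {"WA": {"NT", "SA"}, "NT": {"WA", "SA", "Q"}, "SA": {"WA", "NT", "Q", "NSW", "V"},
         "Q": {"NT", "SA", "NSW"}, "NSW": {"SA", "Q", "V"}, "V": {"SA", "NSW"}, "T": set()}

def backtrack_fc(assignment, domains, graph):
    """Backtracking with forward checking for "neighbors differ" constraints.

    `domains` maps each variable to its *remaining* legal values.
    Returns a complete assignment, or None if there is none.
    """
    if len(assignment) == len(domains):
        return assignment
    var = next(v for v in domains if v not in assignment)   # simple static ordering
    for value in domains[var]:
        # Forward checking: drop `value` from every unassigned neighbor's domain
        pruned = {n: [x for x in domains[n] if x != value]
                  for n in graph[var] if n not in assignment}
        if any(not vals for vals in pruned.values()):
            continue            # a neighbor has no legal values left: skip this branch
        result = backtrack_fc({**assignment, var: value},
                              {**domains, **pruned, var: [value]}, graph)
        if result is not None:
            return result
    return None

solution = backtrack_fc({}, {v: ["red", "green", "blue"] for v in variables}, graph)
print(solution)
```

Because each value is drawn from an already-pruned domain, the assignment stays consistent without a separate constraint check.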

2.3.5 Constraint propagation

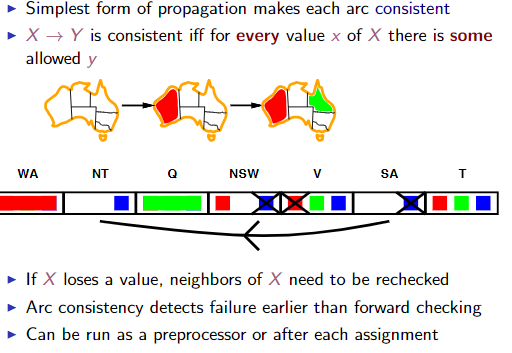

2.3.6 Arc consistency

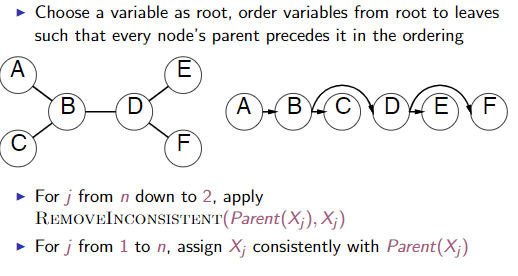
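One standard way to enforce arc consistency is the AC-3 algorithm. The sketch below assumes "neighbors differ" constraints on the hypothetical Australia map-coloring graph. An arc X → Y is consistent when every value of X has some supporting value in Y.

```python
from collections import deque

graph = {"WA": {"NT", "SA"}, "NT": {"WA", "SA", "Q"}, "SA": {"WA", "NT", "Q", "NSW", "V"},
         "Q": {"NT", "SA", "NSW"}, "NSW": {"SA", "Q", "V"}, "V": {"SA", "NSW"}, "T": set()}

def ac3(domains, graph):
    """Make every arc X -> Y consistent for "neighbors differ" constraints.

    Modifies `domains` in place. Returns False if some domain becomes empty
    (no solution is possible), else True.
    """
    queue = deque((x, y) for x in graph for y in graph[x])
    while queue:
        x, y = queue.popleft()
        # Keep only values of X that have a supporting value in Y (one that differs)
        revised = [vx for vx in domains[x] if any(vx != vy for vy in domains[y])]
        if len(revised) < len(domains[x]):
            domains[x] = revised
            if not revised:
                return False
            # X's domain shrank, so arcs pointing into X must be rechecked
            queue.extend((z, x) for z in graph[x] if z != y)
    return True

domains = {v: ["red", "green", "blue"] for v in graph}
domains["WA"], domains["Q"] = ["red"], ["green"]
print(ac3(domains, graph))
```

With WA = red and Q = green, both NT and SA are reduced to blue, and since they are neighbors the arc NT → SA fails, so AC-3 detects the dead end before any further search.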

2.3.7 Tree structured CSPs

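Theorem: if the constraint graph has no loops, the CSP can be solved in \(O(n d^2)\) time, where \(n\) is the number of variables and \(d\) the domain size. A general CSP takes \(O(d^n)\) time in the worst case.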
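A sketch of the usual tree-CSP algorithm behind this bound: order the nodes from a root so parents come before children, make each parent → child arc consistent in a backward pass (\(O(d^2)\) per arc, \(n - 1\) arcs), then assign values root to leaves with no backtracking. It assumes the graph is a connected tree; `allowed` is a hypothetical constraint-check callback.

```python
from collections import deque

def solve_tree_csp(domains, graph, root, allowed):
    """Solve a CSP whose constraint graph is a connected tree in O(n * d^2).

    `allowed(a, va, b, vb)` says whether the constraint between a and b holds.
    Returns an assignment dict, or None if there is no solution.
    """
    domains = {v: list(vals) for v, vals in domains.items()}
    if any(not vals for vals in domains.values()):
        return None
    # 1. Order nodes so every parent comes before its children (BFS from root)
    order, parent = [root], {root: None}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for child in graph[node]:
            if child not in parent:
                parent[child] = node
                order.append(child)
                queue.append(child)
    # 2. Backward pass: make each arc parent -> child consistent
    for child in reversed(order[1:]):
        p = parent[child]
        domains[p] = [vp for vp in domains[p]
                      if any(allowed(p, vp, child, vc) for vc in domains[child])]
        if not domains[p]:
            return None
    # 3. Forward pass: every remaining parent value has support in its child
    assignment = {root: domains[root][0]}
    for child in order[1:]:
        p = parent[child]
        assignment[child] = next(vc for vc in domains[child]
                                 if allowed(p, assignment[p], child, vc))
    return assignment

chain = {"A": {"B"}, "B": {"A", "C"}, "C": {"B"}}
differ = lambda a, va, b, vb: va != vb
print(solve_tree_csp({"A": ["red"], "B": ["red", "green"], "C": ["green", "red"]},
                     chain, "A", differ))
```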

2.4 Connections to tree search, iterative improvement

To apply iterative improvement (e.g. hill climbing) to CSPs, work with complete assignments that may violate constraints: select any conflicted variable, then use the min-conflicts heuristic to choose a value that violates the fewest constraints.
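A minimal min-conflicts sketch, assuming "neighbors differ" constraints and the hypothetical Australia map-coloring data. Ties between equally good values are broken randomly so the search does not get stuck cycling between the same states.

```python
import random

variables = ["WA", "NT", "SA", "Q", "NSW", "V", "T"]
domains = {v: ["red", "green", "blue"] for v in variables}
graph = {"WA": {"NT", "SA"}, "NT": {"WA", "SA", "Q"}, "SA": {"WA", "NT", "Q", "NSW", "V"},
         "Q": {"NT", "SA", "NSW"}, "NSW": {"SA", "Q", "V"}, "V": {"SA", "NSW"}, "T": set()}

def min_conflicts(variables, domains, graph, max_steps=10_000, rng=None):
    """Local search for "neighbors differ" CSPs using the min-conflicts heuristic."""
    rng = rng or random.Random()
    # Start from a complete (probably inconsistent) random assignment
    current = {v: rng.choice(domains[v]) for v in variables}

    def conflicts(var, value):
        return sum(1 for n in graph[var] if current[n] == value)

    for _ in range(max_steps):
        conflicted = [v for v in variables if conflicts(v, current[v]) > 0]
        if not conflicted:
            return current                      # every constraint is satisfied
        var = rng.choice(conflicted)            # any conflicted variable
        # Value that violates the fewest constraints (ties broken randomly)
        current[var] = min(domains[var], key=lambda x: (conflicts(var, x), rng.random()))
    return None

solution = min_conflicts(variables, domains, graph, rng=random.Random(0))
print(solution)
```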

3 Ch 13: Uncertainty

3.1 Basic theory and terminology

3.1.1 Probability space

The probability space \(\Omega\) is the set of all possible outcomes. Ex: a die roll has 6 possible outcomes.

3.1.2 Atomic Event

An atomic event \(w\) is a single element of the probability space, \(w \in \Omega\). Ex: rolling a 4 on a die. The probability of \(w\) lies in \([0, 1]\).

3.1.3 Event

An event A is any subset of the probability space \(\Omega\). The probability of an event is the sum of the probabilities of the atomic events in it.

Ex: the probability of rolling an even number on a die is 1/2.

P(die roll odd) = P(1) + P(3) + P(5) = 1/6 + 1/6 + 1/6 = 1/2

3.1.4 Random variable

A random variable is a function from sample points to some range, e.g. the reals or the booleans. Ex: Even is true for the outcomes 2, 4, and 6, so P(Even = true) = 1/2.
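A small Python sketch of these definitions for one roll of a die, assuming a fair die (each atomic event has probability 1/6). `fractions.Fraction` keeps the arithmetic exact; `P` and `Even` are illustrative names.

```python
from fractions import Fraction

# Probability space of one fair die; each atomic event has probability 1/6
omega = [1, 2, 3, 4, 5, 6]
P_atom = {w: Fraction(1, 6) for w in omega}

def P(event):
    """Probability of an event (a set of atomic events) = sum of its atoms' probabilities."""
    return sum(P_atom[w] for w in event)

def Even(w):
    """A Boolean random variable: a function from sample points to {True, False}."""
    return w % 2 == 0

print(P({1, 3, 5}))                        # odd roll: 1/2
print(P({w for w in omega if Even(w)}))    # P(Even = true): 1/2
```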

3.2 Prior probability

Prior (unconditional) probabilities describe beliefs before any other evidence is known. They can involve one or more events. Ex: P(cloudy and fall) = 0.72.

Given two variables with two possible values each, we can represent the full joint distribution in a 2x2 table.
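A sketch of such a 2x2 joint table and of reading marginals off it by summing rows or columns. Only P(cloudy, fall) = 0.72 comes from the example above; the other three entries are hypothetical values chosen so the table sums to 1.

```python
from fractions import Fraction as F

# Full joint distribution P(Cloudy, Fall) as a 2x2 table.
# Only (True, True) = 0.72 is from the notes; the other entries are hypothetical.
joint = {
    (True, True): F("0.72"),  (True, False): F("0.08"),
    (False, True): F("0.12"), (False, False): F("0.08"),
}

def P_cloudy(value):
    """Marginal P(Cloudy = value): sum the entries where Cloudy = value."""
    return sum(p for (c, f), p in joint.items() if c == value)

def P_fall(value):
    """Marginal P(Fall = value): sum the entries where Fall = value."""
    return sum(p for (c, f), p in joint.items() if f == value)

print(float(P_cloudy(True)), float(P_fall(True)))
```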

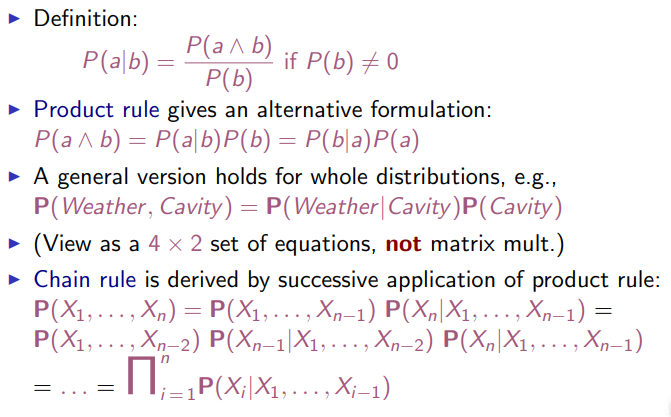

3.3 Conditional Probability

The probability of an event given that another event is known to have occurred: P(a | b) = P(a and b) / P(b), for P(b) > 0. Ex: P(tired | monday) = 0.9.
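A one-function sketch of the definition. The inputs are hypothetical: Monday is assumed to be 1 day in 7, and P(tired and monday) = 9/70 is chosen so the result matches the 0.9 in the example.

```python
from fractions import Fraction as F

def conditional(p_a_and_b, p_b):
    """P(a | b) = P(a and b) / P(b), defined only when P(b) > 0."""
    if p_b == 0:
        raise ValueError("P(b) must be positive")
    return p_a_and_b / p_b

# Hypothetical numbers: P(monday) = 1/7 and P(tired and monday) = 9/70
print(conditional(F(9, 70), F(1, 7)))   # 9/10, i.e. P(tired | monday) = 0.9
```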

3.4 Bayes rule

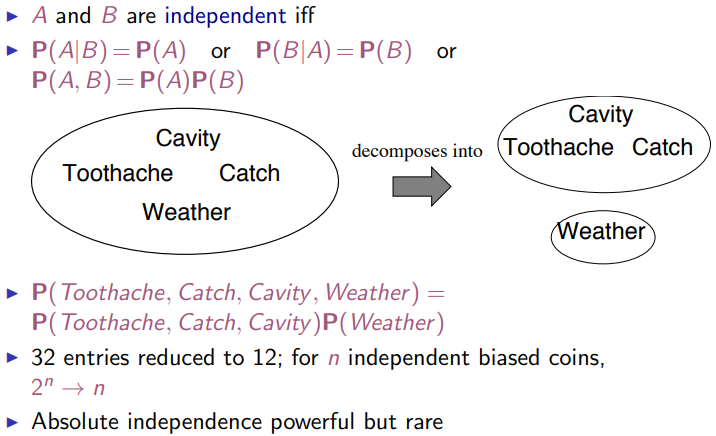
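Bayes' rule, P(a | b) = P(b | a) P(a) / P(b), lets us turn the tired/Monday example around. In this sketch P(tired | monday) = 0.9 is from the notes, while P(monday) = 1/7 and P(tired) = 1/2 are hypothetical values for illustration.

```python
from fractions import Fraction as F

def bayes(p_b_given_a, p_a, p_b):
    """Bayes' rule: P(a | b) = P(b | a) * P(a) / P(b)."""
    return p_b_given_a * p_a / p_b

# P(monday | tired) from P(tired | monday) = 9/10, P(monday) = 1/7, P(tired) = 1/2
p_monday_given_tired = bayes(F(9, 10), F(1, 7), F(1, 2))
print(p_monday_given_tired)   # 9/35
```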

3.5 Independence
